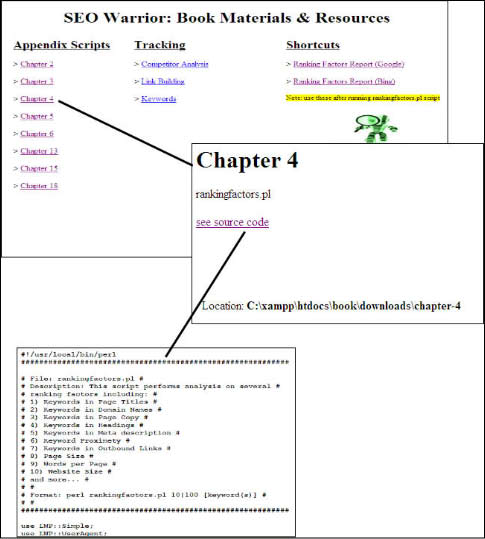

As promised, I have been working to incorporate all of the SEO Warrior scripts under the XAMPP umbrella. Here are some of the things coming up in the next few days, starting January 15th.

– integrated all book scripts into XAMPP for easy navigation & installation (you would simply open http://localhost:10000/index.html to start navigation)

– several smaller bug fixes are in this release

– removed Yahoo! results from rankingfactors.pl script as Yahoo! will be using Bing shortly

– added local PR checker script (htdocs/util/pr)

– video tutorials for SEO Warrior Dashboard and rankingfactors.pl script.

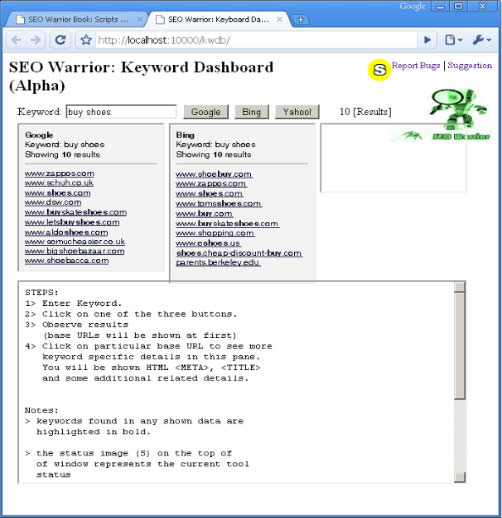

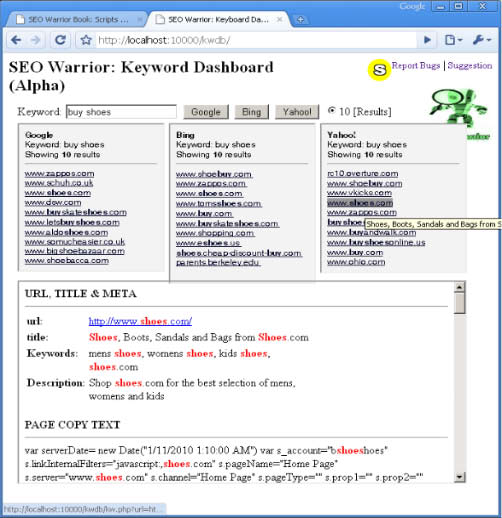

Here is a sneak peek at the SEO Warrior code XAMPP integration as well as the new look of the SEO Warrior Keyword Dashboard.

Thanks,

John

Tags: book, code, keyword dashboard, release, seo warrior

Bing is Microsoft’s web search engine and, while relatively new, it holds promise as a major challenger to Google’s share of the market. This is especially the case since Microsoft entered into an agreement with Yahoo! Search to combine resources starting in 2010. The first question that those doing search engine optimization (SEO) will ask is how to optimize for Bing. Optimizing for Bing is not drastically different from optimizing for the other major search engines, but what makes it even easier is the rich set of tools provided for the task. Here are some of those tools and how they help get a site ranked in Bing.

Bing provides a site submission page similar to those of the other major search engines. Using the submission tool is free, but you should not attempt to submit your site if it is already listed in Bing’s index. In addition, the site submission page requires you to enter a graphically presented code, which discourages the use of automated submission scripts.

A user’s search experience can be enhanced with the Bing Web Page Error Toolkit. By default, when a web page cannot be found, a user receives a 404 error page and nothing more. At this point, the user can either give up on the search or try to think of other keywords that might locate the desired information. Microsoft provides a tool that allows developers to use the Bing search application programming interface (API) to return a page that offers the user other search options. The results are presented as a related searches page, and the API does the work of finding alternate keywords that could pinpoint the desired information. The results page can also be customized by the developer.

Side-by-side comparison tools are popular for checking SEO progress. If your site gets ranked at the top of a Google SERP, you will no doubt want to see how it is doing in Bing. Several websites offer side-by-side tools that let you enter a search phrase once and compare what both search engines return. This allows the SEO analyst to see what is working for a particular website and adjust what is not.
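The comparison itself is easy to sketch once you have the two result lists in hand. The Python below is only an illustration, not how any particular side-by-side site works: the result URLs are hard-coded sample data, and the `rank_of` and `compare_rankings` helpers are names made up for this example.

```python
from urllib.parse import urlparse


def rank_of(domain, result_urls):
    """Return the 1-based position of the first result on `domain`, or None."""
    for position, url in enumerate(result_urls, start=1):
        host = urlparse(url).netloc.lower()
        # Match the bare domain or any of its subdomains (www., blog., ...).
        if host == domain or host.endswith("." + domain):
            return position
    return None


def compare_rankings(domain, results_by_engine):
    """Map each engine name to the rank of `domain` in that engine's results."""
    return {
        engine: rank_of(domain, urls)
        for engine, urls in results_by_engine.items()
    }


# Hypothetical result lists for one search phrase (illustrative data only).
sample_results = {
    "google": [
        "http://example.org/a",
        "http://www.example.com/seo",
        "http://example.net/",
    ],
    "bing": [
        "http://example.net/",
        "http://example.org/a",
        "http://blog.example.com/post",
    ],
}

comparison = compare_rankings("example.com", sample_results)
print(comparison)
```

In practice you would fill `sample_results` from whatever source of search results you are permitted to use; the comparison logic stays the same.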

The IIS SEO Toolkit from Microsoft provides a robust set of modules to make optimization tasks much easier. The modules provided are the Site Analysis Module, Sitemap Module, Site Index Module, and Robots Exclusion Module. Using all these modules, an SEO specialist can get an analysis of the current site they are optimizing, recommendations for improving ranking strategies, lists of site violations, and facilities for managing the robots.txt file and sitemaps.

Using the IIS SEO Toolkit Site Analysis tool, you can get a comprehensive list of common problems that work against optimization efforts. These problems, also referred to as violations, include poor performance, broken links, and invalid markup. The analysis also detects duplicate content within the analyzed website. Results are coupled with recommendations on how to correct any problems detected. A query facility is also provided so that you can produce a variety of detailed reports.
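To get a feel for what duplicate content detection involves, here is a minimal Python sketch. It is not the toolkit's method (which is not documented here); it simply assumes that two pages count as duplicates when their text is identical after lowercasing and collapsing whitespace. The page texts and the `content_fingerprint` and `find_duplicates` helpers are made up for this example.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash page text after lowercasing and collapsing runs of whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose normalized text is identical.

    `pages` maps a URL to the text extracted from that page.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Illustrative sample data only.
pages = {
    "/about": "About  our company.\n",
    "/about-us": "about our company.",
    "/contact": "Contact us today.",
}

duplicates = find_duplicates(pages)
print(duplicates)
```

An exact-match hash like this only catches verbatim copies; near-duplicates would need a fuzzier comparison.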

The IIS SEO Toolkit makes management of the robots.txt file, sitemaps, and sitemap indexes easier. The robots.txt file is easier to manage because you get a logical view of your website in a graphical user interface and can select where a crawler is allowed and disallowed. Within the robots exclusion module, you can also add the sitemap location to tell a crawler where to find your URLs. Likewise, the sitemap and sitemap index modules allow easy editing and maintenance of these two objects, which are critical to effective SEO of a website.
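For readers who are not on IIS, the file the module produces is plain text and simple to generate by hand. The following Python sketch writes a robots.txt body with a few disallowed paths and a sitemap location; the paths, the example.com URL, and the `build_robots_txt` helper are assumptions for illustration, not output of the toolkit.

```python
def build_robots_txt(disallowed_paths, sitemap_url, user_agent="*"):
    """Return robots.txt content with Disallow rules and a Sitemap line."""
    lines = [f"User-agent: {user_agent}"]
    if disallowed_paths:
        lines += [f"Disallow: {path}" for path in disallowed_paths]
    else:
        # An empty Disallow value means nothing is blocked.
        lines.append("Disallow:")
    lines.append("")
    # The Sitemap directive tells crawlers where the sitemap lives.
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


# Hypothetical paths and sitemap URL.
robots_txt = build_robots_txt(
    ["/admin/", "/print/"],
    "http://www.example.com/sitemap.xml",
)
print(robots_txt)
```

Save the result as robots.txt in the root of your site so crawlers can find it.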

Search engines, including Google, can and will retain information about your web searches. It is possible that this information could find its way to authorities in the US (or elsewhere) thanks to the Patriot Act. Here is a video of Eric Schmidt, Google CEO, basically affirming that your search privacy could be compromised.

There are many ways you can protect your privacy. You could do a search for ‘private surfing’ and follow the result links until you find a suitable tool or a proxy surfing site. You could also install programs such as Private Proxy (http://www.privateproxysoftware.com/).

Many users prefer to use an online proxy site to protect their online privacy. PublicProxyServers.com provides a database of many good anonymous proxy sites. You can sort the proxy sites by domain, rating, country, access time, % of up-time, online time and last test. Once you pick a particular proxy site, you might see some ads at the top of the page while browsing your sites of interest. Most of the proxy sites only have the top banner ad, so your surfing experience should be relatively good if you can tolerate it. Most if not all of the proxy sites found on PublicProxyServers.com work well with most search engines, Facebook, Twitter, Amazon and other popular sites, effectively bypassing any firewalls forbidding access to these sites.

You could also create your own private proxy. There are several free scripts you can download, and one of those is Glype. You can install Glype on one of the many free hosts available on the internet. Using a proxy such as Glype helps keep your browsing anonymous by hiding your IP address from the sites you visit. Here is a video that shows how you can install Glype.

There are search engines that claim to completely respect your privacy. One of those is Cuil, which was started by former Google employees. Another search engine that claims to be fully mindful of your privacy is Startpage.com.

Tags: internet privacy, internet proxy, online privacy, safe surfing

In the coming months, I will be working with partner sites to bring you various SEO research, including analysis of Google News, Google Images and others. As mentioned earlier, I will be releasing numerous code updates to existing scripts (as time allows). All of the SEO Warrior code (scripts) will always be free to use (GPL license).

During 2010, I will be posting SEO, SEM, and SMO tutorials, cheat sheets, webmaster tips and more. Some of this will be available to the public and some only to SEO Warrior readers. Stay tuned for more information.

Wishing you Happy Holidays and all the best in 2010!

Cheers,

John

You can download the newest SEO Warrior Keyword Dashboard (XAMPP package) at this link.

This update fixes Bing issues.

Regards,

John

Tags: keyword dashboard, seo warrior, update

There is no doubt that the creators of Microsoft’s new search engine, Bing, are trying to create a user experience that improves on current market leaders such as Google. However, those improvements will take time to become significant. So, for the most part, search engine optimization (SEO) for Bing is not much different from SEO for the other engines, and the differences that do exist are subtle. This is advantageous because SEO experts will not have to adopt a totally different optimization process for Bing and can still draw upon their experience of what works well. Since the public does not really know Bing’s ranking algorithm, most findings about what matters for Bing optimization come from side-by-side comparisons of its search engine result pages (SERPs) with Google’s. Here are a few important SEO considerations for Bing.

Your website should be complete and validated. Resist the urge to place a partially completed website online. All major search engines place a high priority on websites that are well-designed, complete, and have cross-browser compatibility—Bing included. You should be validating your code no matter what because doing so helps to ensure the same web presentation no matter what web browser a person is using. Visit the World Wide Web Consortium website for more information on validation.

Relevant and unique content is still just as important. However, some believe that content change rate is not as critical with Bing as it is with Google. For example, blogs do well in Google when entries are added frequently, because Google has always placed a greater weight on active and fresh sites. With Bing, the domain age of a site seems to be of greater importance, so fresh content has less of an impact. Content should still be relevant and written for the reader.

Backlinks are not weighted as heavily by Bing in an effort to combat link spamming. Google is well known for ranking a website based on the number of links pointing back to it. This fact has over the years been exploited by those practicing a Black Hat SEO technique known as link spamming or spamdexing. One method of link spamming involves filling a newly-purchased domain with nothing but links to the website getting optimized. Older and more authoritative websites do not usually practice these methods—otherwise they would have been removed from the Google index a long time ago.

Consider the concept of exact match when optimizing for Bing. Experts who have compared Bing with other search engines have noticed that while Google will match words closely related to a keyword, Bing focuses on exact matches and offers related searches along the left side of the SERP.

Keywords in link anchor text matter to Bing just as they do to Google. This is because links that are crafted with important keywords for a website take time to create and are deemed to be of higher quality. Usually, with link spamming, links are put out as quickly and in as much volume as possible, with little consideration for assigning keywords. Keyword anchor text is also thought to help with Bing’s exact match criteria.

Download and install an SEO toolkit designed for Bing. One such tool is the Internet Information Services (IIS) SEO Toolkit, a product of Microsoft. This toolkit allows you to check any website for problems that commonly cause ranking difficulties. Its site analysis module can analyze a website for potential problems such as broken links and poor site performance. Another useful component is a module that helps you edit the robots.txt file. The robots.txt file is important for controlling how an indexing robot reads a site and for defining which pages to exclude. For example, if a site has duplicate content among two or more pages, the robots.txt file can be easily edited to tell the robot to ignore the duplicates. All the editing is done through a graphical interface, relieving the user of the need to remember the textual instructions.
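Whichever way you edit the file, it is worth checking that the rules do what you intended. Here is a small Python sketch that uses the standard library's `urllib.robotparser` to test URLs against a set of rules. The rules and URLs are invented for this example (assuming the duplicate pages live under /print/), and `is_crawlable` is just a helper name used here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep robots away from duplicate "print" versions of pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
"""

parser = RobotFileParser()
# parse() accepts the file's lines, so no network request is made here.
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the parsed rules allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


allowed = is_crawlable("http://www.example.com/articles/bing-seo.html")
blocked = is_crawlable("http://www.example.com/print/bing-seo.html")
print(allowed, blocked)
```

To check a live site instead, you could point the parser at the real file with `set_url()` and `read()` rather than parsing a string.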

Tags: bing, important, strategies

Read Chris Sherman’s reviews of the top SEO books of 2009 by clicking on this link.

Enjoy.

John

Tags: search engine land

Both Bing and Google have unveiled their real time search variants. Theoretically speaking, real time search is impossible to achieve. For it to be possible, you would have to be able to search any web page the moment it is submitted. What is possible is for search engines to index many popular social media sites, blogs, and news sites to produce what they call ‘real time search’.

One could argue that this is good enough to be labeled as ‘real time search’. The rules of real time search should be very similar to those of ‘regular’ search, in the sense that keyword relevancy will be important. In order to fight spam, search engines will depend heavily on each site’s authority and trust.

The following video talks about Google’s ‘real time’ search.

Similarly, I’ve found the following video which goes over Bing’s search of Twitter.

Enjoy!

John

Tags: bing, Google, real time search, twitter

Organic search engine optimization (SEO) is full of challenges, and getting top-ranked is not easy. Understanding these challenges allows you to plan your SEO strategy much better because you will get a realistic picture of what to expect. Getting through the challenges is well worth the effort once you see your website at the top of the search engine result pages (SERPs). What are some of the ways SEO specialists deal with these challenges? Let’s look at a few.

The number of competitors can often be astronomical. Many studies have been done in order to determine the number of top-level domains on the internet today. You can rest assured that you are not unique in your niche and you will be competing with countless others for the same top ranking on a SERP. The best way to deal with so many competitive sites is to make sure yours is up-to-date and well-maintained.

It is a challenge, if not impossible, to keep up-to-date with the various ranking algorithms of the major search engines. Search engines such as Yahoo, Google, and Bing are constantly reviewing their ranking algorithms and introducing new approaches within them. This need for constant review stems from the numerous black hat SEO specialists who try to circumvent the current algorithms in an attempt to get a site ranked faster. One of the objectives of the major search engines is to remain fair and impartial, so they must combat the black hat SEO threat. You may consider yourself an expert in these algorithms today, but it won’t take long before that expertise becomes outdated.

Overcoming impatience is a challenge when waiting for results. Impatience can be troublesome when you consider the time it takes to have content written, get backlinks, contribute to forums, and do all the other tasks required in order to achieve truly organic SEO results. Probably what makes a person impatient is the fact that the search engines do not provide you any feedback as to what you are doing right or wrong when it comes to optimizing your site. No matter what, resist the urge to use black hat SEO tactics because you run the risk of getting your site penalized which means you will have to wait longer for ranking results.

If your SEO project is a group effort, consensus can be challenging. A large company will probably use a group to implement their internet marketing campaign. More than likely, this group will have representatives from the various departments. Depending on the size of the company, the group could increase in size meaning that there will undoubtedly be different opinions as to what will work in their SEO efforts. In a large group, there are various levels of understanding about SEO among its members. Some members with limited SEO understanding will have to be “educated” in order to contribute to the bigger picture. The best way to ensure a successful group campaign is to have a plan and hold team members accountable for their area of responsibility before embarking deep into the project.

Another challenge is finding the right websites to provide you with inbound links. Search engines favor inbound links that originate from reputable and relevant websites, but link building is always an uphill struggle. Each quality inbound link is like a vote of confidence for your website, so you want to get as many as possible in the shortest amount of time. However, you also don’t want to get a high number of inbound links too quickly, because a search engine might think you are trying to spam it.

Content is very important for ranking a website, and content issues must be dealt with consistently. Duplicate content is one common issue that must be quickly eliminated or your website could get penalized by the search engines. Large businesses oftentimes have duplicate gateway pages among their affiliate websites, so webmasters must make sure that the robots.txt files for their sites list the duplicate content links that indexing bots should avoid.

As of last week, this blog is also available for mobile devices. Simply point your mobile device to http://seowarrior.net

Cheers.

John

Tags: Blog, mobile, seo warrior